Unraveling Emotions: Exploring Speech Emotion Recognition with LSTM Deep Learning Models

Speech Emotion Recognition using Deep Learning

In today's technology-driven world, understanding human emotions matters in many fields, including psychology, customer service, and human-computer interaction. Speech is a rich source of emotional cues, which makes it a natural signal for identifying emotions. In this blog, we explore Speech Emotion Recognition (SER) with deep learning models, with an emphasis on the Long Short-Term Memory (LSTM) architecture.

Understanding Speech Emotion Recognition (SER)

Speech Emotion Recognition (SER) is the task of automatically identifying the emotional state conveyed by spoken language. Pitch, intensity, and spectral properties are only a few of the acoustic characteristics that can be used to infer emotions such as happiness, sadness, anger, surprise, disgust, neutral, and fear from speech signals.
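To make two of these characteristics concrete, the pure-Python sketch below splits a signal into short frames and computes a per-frame intensity proxy (root-mean-square energy) and a rough pitch estimate (the autocorrelation peak within an assumed 60 to 400 Hz search range). The function names, frame sizes, and search range are illustrative assumptions; practical systems usually rely on an audio library and more robust pitch trackers.

```python
import math

def frame_signal(signal, frame_len, hop):
    """Split a 1-D signal into overlapping frames; a trailing partial frame is dropped."""
    return [signal[i:i + frame_len] for i in range(0, len(signal) - frame_len + 1, hop)]

def rms_energy(frame):
    """Root-mean-square amplitude of a frame, a simple proxy for intensity (loudness)."""
    return math.sqrt(sum(x * x for x in frame) / len(frame))

def autocorr_pitch(frame, sample_rate, fmin=60.0, fmax=400.0):
    """Rough fundamental-frequency estimate in Hz: the lag in [sr/fmax, sr/fmin] with the
    largest (unnormalized) autocorrelation is taken as one pitch period."""
    min_lag = max(1, int(sample_rate / fmax))
    max_lag = min(int(sample_rate / fmin), len(frame) - 1)
    best_lag, best_val = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        # Correlate the frame with a copy of itself shifted by `lag` samples.
        val = sum(frame[n] * frame[n + lag] for n in range(len(frame) - lag))
        if val > best_val:
            best_lag, best_val = lag, val
    return sample_rate / best_lag
```

On a synthetic 200 Hz sine sampled at 8 kHz, 400-sample frames (50 ms) with a 200-sample hop give 39 frames per second of audio, and the pitch estimate for each frame is 200 Hz.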

The Role of Deep Learning in SER

Deep learning has substantially advanced SER by making it possible to build more reliable and accurate emotion recognition systems. Among deep learning architectures, Long Short-Term Memory (LSTM) networks are a popular choice because they can model dependencies that span long stretches of sequential data. This makes them well suited to time-series data such as speech signals.

How LSTM Works
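An LSTM processes a sequence one time step at a time, carrying a hidden state h and a cell state c. At each step, an input gate i, a forget gate f, and an output gate o (each a sigmoid of a linear function of the current input and the previous hidden state) decide how much new information to write into the cell, how much of the old cell state to keep, and how much of the cell to expose as output. The pure-Python sketch below implements the standard update c_t = f * c_(t-1) + i * g and h_t = o * tanh(c_t), with element-wise products. The helper names, the uniform random initialization, and the 13-dimensional input (a common number of MFCCs) are assumptions for illustration; real models would use a deep learning framework's built-in LSTM layer.

```python
import math
import random

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def matvec(m, v):
    """Multiply matrix m (a list of rows) by vector v."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]

def init_params(input_size, hidden_size, seed=0):
    """Small random input weights W, recurrent weights U, and zero biases b for each gate."""
    rng = random.Random(seed)
    def mat(rows, cols):
        return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]
    params = {}
    for gate in ("i", "f", "o", "g"):  # input, forget, output gates and candidate cell
        params["W_" + gate] = mat(hidden_size, input_size)
        params["U_" + gate] = mat(hidden_size, hidden_size)
        params["b_" + gate] = [0.0] * hidden_size
    return params

def lstm_step(x, h_prev, c_prev, p):
    """One LSTM time step; returns the new hidden state h and cell state c."""
    def pre(gate):  # W x + U h_prev + b for one gate
        wx = matvec(p["W_" + gate], x)
        uh = matvec(p["U_" + gate], h_prev)
        return [a + b + bias for a, b, bias in zip(wx, uh, p["b_" + gate])]
    i = [sigmoid(z) for z in pre("i")]    # how much new information to write
    f = [sigmoid(z) for z in pre("f")]    # how much of the old cell state to keep
    o = [sigmoid(z) for z in pre("o")]    # how much of the cell to expose as output
    g = [math.tanh(z) for z in pre("g")]  # candidate values to write
    c = [fk * ck + ik * gk for fk, ck, ik, gk in zip(f, c_prev, i, g)]
    h = [ok * math.tanh(ck) for ok, ck in zip(o, c)]
    return h, c

def lstm_forward(sequence, p, hidden_size):
    """Run the cell over a sequence of feature vectors, starting from zero states."""
    h = [0.0] * hidden_size
    c = [0.0] * hidden_size
    for x in sequence:
        h, c = lstm_step(x, h, c, p)
    return h, c
```

Feeding a sequence of per-frame feature vectors through `lstm_forward` yields a final hidden state that summarizes the utterance, which a classification layer can then map to an emotion. As a sanity check on the equations: with all weights and biases set to zero, every gate outputs 0.5 and the candidate g is 0, so both states stay at zero.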

Building an LSTM-based SER Model

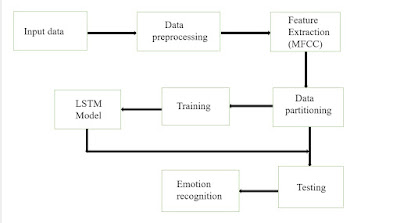

To develop an LSTM-based SER model, we follow these steps:

1. Data Collection and Preprocessing: Compile a dataset of voice samples labeled with the relevant emotion categories. Then prepare the audio for further processing by extracting relevant features such as Mel-frequency cepstral coefficients (MFCCs) or spectrograms.

2. Model Architecture Design: Design an LSTM network tailored to SER. For example, the model could stack several LSTM layers followed by fully connected layers for classification.

3. Training: Train the LSTM model on the preprocessed speech samples. During training, the model learns to map input speech features to the corresponding emotion labels by minimizing a suitable loss function (for example, cross-entropy for multi-class classification).

4. Evaluation: Evaluate the trained model on a separate test dataset that was not used during training. Metrics such as accuracy, precision, recall, and F1-score are commonly used to measure how well the model recognizes each emotion.

5. Fine-tuning and Optimization: Adjust the model and tune its hyperparameters to improve performance. Techniques such as regularization, dropout, and learning rate scheduling can improve the model's ability to generalize.
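Steps 2 and 3 end with a fully connected layer that maps the LSTM's final hidden state to one score (logit) per emotion, followed by a softmax and a loss to minimize. The pure-Python sketch below shows such a classification head, assuming cross-entropy as the loss and the seven emotion labels listed earlier; the function names are hypothetical, and in a real model the weights and biases would be learned during training.

```python
import math

# Emotion labels from the SER task description (the order here is arbitrary).
EMOTIONS = ["happiness", "sadness", "anger", "surprise", "disgust", "neutral", "fear"]

def dense(x, weights, bias):
    """Fully connected layer: one output per row of `weights`."""
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weights, bias)]

def softmax(logits):
    """Convert logits to probabilities; subtracting the max avoids overflow in exp."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(probs, target_index):
    """Negative log-probability of the true class, the quantity minimized in training."""
    return -math.log(probs[target_index])

def predict(hidden_state, weights, bias):
    """Return the most probable emotion label and the full probability vector."""
    probs = softmax(dense(hidden_state, weights, bias))
    best = max(range(len(probs)), key=probs.__getitem__)
    return EMOTIONS[best], probs
```

With zero weights and biases every logit is 0, so the softmax is uniform (1/7 per emotion) and the cross-entropy is ln 7, roughly 1.95. Training lowers this loss by shifting probability toward the correct label.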
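The evaluation metrics in step 4 can be computed per emotion from counts of true positives, false positives, and false negatives. The plain-Python sketch below is illustrative only; the function name and the choice of macro averaging (an unweighted mean of per-class F1-scores) are assumptions, and libraries such as scikit-learn provide equivalent, well-tested functions.

```python
def emotion_metrics(y_true, y_pred, labels):
    """Per-class precision, recall and F1, plus overall accuracy and macro-averaged F1."""
    report = {}
    for label in labels:
        tp = sum(1 for t, p in zip(y_true, y_pred) if t == label and p == label)
        fp = sum(1 for t, p in zip(y_true, y_pred) if t != label and p == label)
        fn = sum(1 for t, p in zip(y_true, y_pred) if t == label and p != label)
        precision = tp / (tp + fp) if tp + fp else 0.0  # correct among predicted `label`
        recall = tp / (tp + fn) if tp + fn else 0.0     # found among true `label`
        f1 = 2 * precision * recall / (precision + recall) if precision + recall else 0.0
        report[label] = {"precision": precision, "recall": recall, "f1": f1}
    accuracy = sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)
    macro_f1 = sum(r["f1"] for r in report.values()) / len(labels)
    return report, accuracy, macro_f1
```

For example, with true labels [anger, anger, happiness, sadness, sadness, neutral] and predictions [anger, happiness, happiness, sadness, neutral, neutral], accuracy is 4/6 and every class has an F1-score of 2/3, because each class has either perfect precision and 50% recall or the reverse.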

Benefits of Deep Learning in SER

Deep learning offers several advantages for Speech Emotion Recognition (SER), using the capabilities of neural networks to improve the accuracy, robustness, and efficiency of emotion recognition systems. Here are a few of the main advantages:

1. Automatic Feature Learning: Deep learning models can learn hierarchical representations directly from raw audio signals, reducing the need for laborious manual feature engineering. This lets the model pick up intricate patterns and nuanced aspects of speech that would be difficult to capture with conventional hand-crafted features.

2. End-to-End Modeling: Deep learning makes it possible to model the entire emotion recognition pipeline end to end, from raw input (the audio waveform) to output (the emotion label). In this approach, feature extraction, representation learning, and classification are integrated into a single neural network, which streamlines the modeling process and can improve performance.

3. Better Generalization: Deep learning models can learn rich, abstract representations of speech that may generalize better to unseen data, including samples that differ from the training distribution. This matters in practice because real-world speech varies in accent, intonation, background noise, and emotional expression.

4. Temporal Dynamics Modeling: Recurrent neural network (RNN) architectures such as the Gated Recurrent Unit (GRU) and Long Short-Term Memory (LSTM) are well suited to modeling temporal dependencies in sequential data such as speech. By capturing long-range dependencies and context over time, these models are better able to detect subtle shifts in emotional state.

5. Multimodal Integration: Deep learning makes it easier to combine multiple modalities, such as speech, facial expressions, and physiological signals, for more accurate and contextually rich emotion recognition. By merging modalities, deep learning models can exploit complementary cues and improve overall performance, particularly in difficult settings where individual emotional cues are ambiguous or contradictory.

6. Scalability and Efficiency: Deep learning frameworks and architectures scale well and can be trained efficiently on large datasets using parallel hardware such as GPUs and TPUs. In addition, optimized implementations and model compression techniques make it possible to deploy deep learning-based SER systems on resource-constrained devices, making them suitable for edge computing and real-time applications.
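Multimodal integration can be as simple as decision-level (late) fusion: each modality's model outputs class probabilities, and the results are combined with a weighted average. The sketch below assumes each modality produces a probability vector over the same emotion labels; the modality names, labels, and weights are illustrative assumptions, and fusing features inside a single network is a common alternative.

```python
def late_fusion(prob_by_modality, weights):
    """Weighted average of per-modality class-probability vectors (decision-level fusion)."""
    total = sum(weights[m] for m in prob_by_modality)
    n_classes = len(next(iter(prob_by_modality.values())))
    fused = [0.0] * n_classes
    for modality, probs in prob_by_modality.items():
        for k, p in enumerate(probs):
            # Normalizing by the total weight keeps the fused vector summing to 1.
            fused[k] += weights[modality] * p / total
    return fused

# Hypothetical label set shared by all modality models.
LABELS = ["anger", "happiness", "neutral"]

def fused_label(prob_by_modality, weights):
    """Return the top label after fusion, together with the fused probabilities."""
    fused = late_fusion(prob_by_modality, weights)
    return LABELS[max(range(len(fused)), key=fused.__getitem__)], fused
```

With speech probabilities [0.6, 0.3, 0.1] and facial-expression probabilities [0.2, 0.7, 0.1], equal weights give [0.4, 0.5, 0.1] and the label "happiness"; weighting speech at 0.7 and the face at 0.3 gives [0.48, 0.42, 0.10] and flips the decision to "anger".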
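Post-training quantization is one common model compression technique for edge deployment: weights stored as 32-bit floats are mapped to 8-bit integers, cutting their storage by a factor of four. The pure-Python sketch below shows symmetric per-tensor int8 quantization; the function names are hypothetical, and real deployment toolchains add details such as per-channel scales and calibration of activations.

```python
def quantize_int8(weights):
    """Symmetric per-tensor int8 quantization: each w is approximated by scale * q, q in [-127, 127]."""
    max_abs = max(abs(w) for w in weights)
    # One scale for the whole tensor, chosen so the largest-magnitude weight maps to +/-127.
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    q = [max(-127, min(127, round(w / scale))) for w in weights]
    return q, scale

def dequantize(q, scale):
    """Recover approximate float weights from the int8 codes."""
    return [v * scale for v in q]

def max_abs_error(weights, q, scale):
    """Largest per-weight reconstruction error introduced by quantization."""
    return max(abs(w - r) for w, r in zip(weights, dequantize(q, scale)))
```

For the example weights [0.5, -1.27, 0.0, 0.03, 1.0], the largest magnitude is 1.27, so the scale is about 0.01 and the codes are [50, -127, 0, 3, 100]. Because each weight is rounded to the nearest code, the reconstruction error is at most half a quantization step.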

Architecture Diagram
